Apple is offering a reward of up to $1 million to anyone who can successfully hack its new fleet of AI-focused servers, which are set to launch next week to power Apple Intelligence. These servers, known as “Private Cloud Compute,” will handle complex AI tasks that cannot be processed on Apple devices themselves.

To address privacy concerns, Apple has designed the servers to delete user requests immediately once a task is completed. The system also uses end-to-end encryption, ensuring that Apple cannot access user requests.

The company is nonetheless inviting the security community to test the privacy claims of Private Cloud Compute. Initially, only a select group of researchers was invited, but on Thursday, Apple opened the program to the public. The company is providing the source code for key components of Private Cloud Compute, along with a “virtual research environment” for running the software on macOS. A security guide with technical details about the server system is also available.

In addition, Apple has expanded its Security Bounty program to reward vulnerabilities that compromise the fundamental security and privacy guarantees of Private Cloud Compute. Rewards for remote attacks range from $250,000 for remotely exposing a user’s data request to $1 million for remotely executing rogue code with elevated privileges. Smaller rewards are offered for discovering how to attack Private Cloud Compute from a privileged network position.

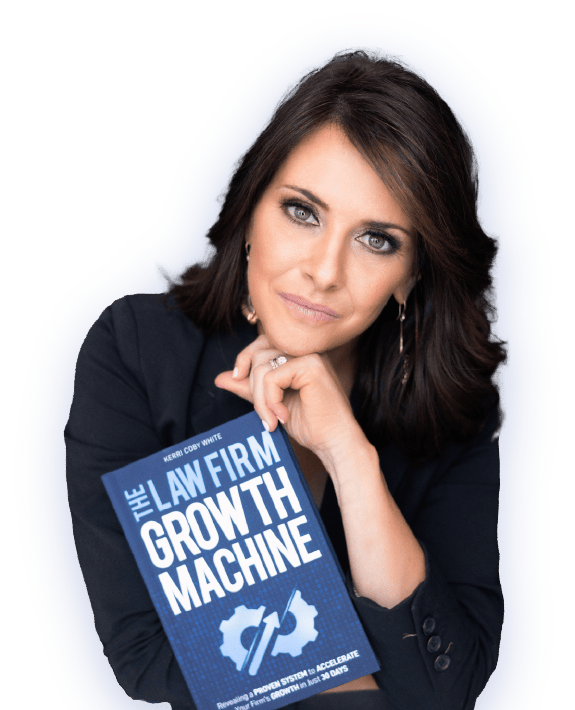
